Assessing EdCast's Information Architecture

Card Sorting • User Interviews • UX Research

Context

The EdCast LXP is a Learning Experience Platform used by corporations and small and midsize businesses (SMBs) to manage and deliver company training. The LXP brings together content, such as articles, courses, or videos, from multiple sources and recommends it to learners/employees based on factors such as their job roles, acquired skills, and learning preferences.

Corporate training can differ vastly depending on a company's learning culture. For that reason, the LXP was made highly customizable. However, the LXP was developed as a collection of MVPs (minimum viable products). Consequently, multiple issues have been reported over the years, among them the difficulty of navigating the platform and finding information.

During Q3 2020, I was approached by the Product Team to help them understand what was causing these issues and what could be done to fix them. Naturally, navigation and information discovery can be affected by a variety of usability- and/or accessibility-related issues. However, the complaints often included references to the terminology used and concerns about the apparently fragmented nature of the platform. This made the platform's Information Architecture the primary suspect and the main target of our research. This page summarizes the process followed and the high-level results of this initiative.

Note: Because this initiative is relatively recent and deals with sensitive product information that leadership stakeholders do not wish to make public, several details had to be omitted. Thank you for your understanding.

Team

• 1 UX Analyst

Support provided by:

• 3 Product Managers

• 1 Customer Success Manager (CSM)

My Contributions

• UX Research. All stages, including:

- Review & Planning

- Experimental Design & Setup

- Recruitment & Execution

- Analysis & Report

Timeline

• Q3 2020

• ~ 6 weeks

Research Goals

Overall, the core goal of this research was to understand what might cause users to find the LXP hard to navigate and to find information in. However, after identifying the platform's information architecture as our starting focus, we were able to refine our goals into:

(1) Understand how users think about the LXP - e.g.:

• What concepts do users believe to be related?

• What concepts do users associate with the LXP?

• What concepts do users believe to be the most important for them?

(2) Learn more about who is using the LXP - every experiment is an opportunity to learn more about one's users, especially when new information might be compared with stakeholders' assumptions.

Methodology

To address the Research Goals mentioned above, I proposed a study combining User Interviews with Card Sorting.

What is Card Sorting?

Card sorting is a method used to help design or evaluate the information architecture of a site ... participants organize topics into categories that make sense to them (Source: Usability.gov).

In other words, during a card sorting session, participants are given a series of cards, each representing a particular topic (such as a page, or a section within a page). They are then asked to group those cards based on common criteria that they find relevant.

Procedure

With the help of some members of our Product and Customer Success Management teams, we approached some of our clients to propose a partnership for this initiative. Those that accepted helped select potential participants from their organizations. Three key criteria were used to recruit participants:

• Must have used the LXP in the past month;

• Has a maximum of 1 year of exposure to the LXP;

• Must not be a Product person nor a Designer (the goal was to understand "how users think", NOT "what users assume others think").

As a result, a total of 15 participants from 3 companies volunteered for this study (the minimum number recommended for this type of study - Source: NNGroup).

Participants took part in the study via remote screen-sharing, using conferencing tools such as MS Teams and Google Meet, depending on their own company policies. Each participant took part in the session individually and from a location of their choice.

Before the session, the moderator briefed the participants on the purpose of the study and on what they would help evaluate. It was important to assure them that they were NOT being tested and that there were no right or wrong answers. Participants were also asked for their consent to record the sessions, for data logging purposes.

Each session was composed of 3 main stages, with a total duration of approximately 40-60 minutes, depending on the participant.

The first stage consisted of a series of background and general experience questions, to collect more information about our users and validate the recruitment phase. This stage included questions such as:

• Tell me about yourself and your relationship to {Company Name}.

• What are the most common things (or tasks) that you do with the LXP?

• How would you describe your experience using the current LXP?

• What are the most (positive | negative) aspects of the LXP, if any?

The second stage consisted of the aforementioned Card Sorting task. This task followed an Open Card Sorting format, where participants had complete freedom over the number and types of groups they created. Participants had a total of 37 cards to sort, each one based on the most common locations and page sections within the LXP. To avoid influencing participants, particular care was taken when labeling these cards, by using terms different from those used in the platform and by avoiding repeated terminology.

Before the task, each participant was given instructions on what would be expected of them and on how to use the tool chosen for the task (Miro). They were encouraged to ask any questions and to "think aloud", as this method often helps reveal potential opportunities for improvement. There was no time limit associated with this stage.

The final stage of the session consisted of a series of closing questions, to understand factors such as:

• How participants saw the overall pattern/structure across the groups they created;

• Whether any of the groups were difficult for them to create;

• Whether any of the cards were difficult for them to put into a group.

At the end of the session, participants were also encouraged to share any comments they might have, whether about the topic of the session or about the session itself.

Before the Sessions

Running the Sessions

Results

Due to the recency of this research initiative and the sensitive information involved, I am not able to share the exact results. However, a summary of the process and some of the most relevant results follows.

Data

The aforementioned process produced three main sets of data:

• Comments and answers from the interview stages of the session, with information about the participants and their overall platform usage;

• Comments, questions, and general notes from the card sorting task, including information about sequences of actions and rationale behind groups;

• Final grouped cards and the corresponding names/topics attributed to them from the card sorting task.

Card Sorting Patterns

To gather insights from the groups created by the participants, I started with a combination of thematic analysis and affinity mapping to identify the most common concepts and the reasons participants gave for creating those groups. At the end of this stage, it was possible to identify 3 high-level themes:

• Learning Content & Activities - Related to what the user does and learns on the platform. Important considerations include:

- Who recommends the content (e.g., AI, Manager, Self);

- When the content is to be used (i.e., planned content, already consumed content);

- What type of content is to be consumed.

• Organization - Related to company information. Some different approaches were found:

- Competitive - focus on comparing users;

- Neutral / Informational - focus on learning about one's organization;

- Social - focus on the interaction between users.

• User - Related to the end user's general information and impact on the platform. This theme was expressed as:

- Contributions - to content or a team;

- Characterization - including "personal" information about the user;

- Settings - "personal" configurations.

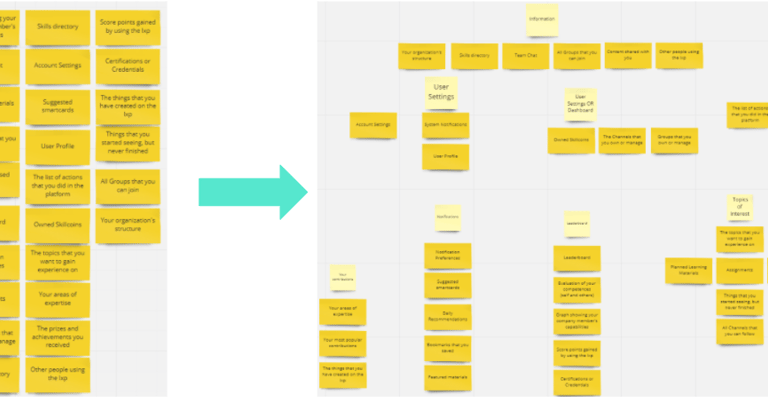

Based on these three main themes and their dimensions, participant-created groups were "combined" to identify which of the original cards/topics were most likely to be grouped together (blurred image below for data-sensitivity reasons).

Following this, a quantitative analysis was conducted, using R to compute hierarchical clusters from the participant-created groups. This allowed us to verify which cards (and groups of cards) were most frequently grouped together (blurred image below for data-sensitivity reasons).
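To illustrate the idea behind this step, here is a minimal sketch in Python rather than R. It is not the analysis script used in the study: the card names, the `sorts` data, the distance definition (share of participants who did not group two cards together), the average-linkage rule, and the `threshold` parameter are all assumptions made for illustration.

```python
from itertools import combinations

# Hypothetical card-sort results: one list of groups per participant.
# Card names are invented; the study's real cards are confidential.
sorts = [
    [{"My Courses", "Assigned Learning"}, {"Leaderboard", "Team Feed"}],
    [{"My Courses", "Assigned Learning", "Team Feed"}, {"Leaderboard"}],
    [{"My Courses", "Assigned Learning"}, {"Leaderboard"}, {"Team Feed"}],
]


def co_occurrence(sorts):
    """Count, for each pair of cards, how many participants grouped them together."""
    counts = {}
    for groups in sorts:
        for group in groups:
            for a, b in combinations(sorted(group), 2):
                counts[(a, b)] = counts.get((a, b), 0) + 1
    return counts


def distance(a, b, counts, n):
    """Assumed distance: share of participants who did NOT group the two cards."""
    key = (a, b) if a < b else (b, a)
    return 1 - counts.get(key, 0) / n


def cluster(sorts, threshold):
    """Average-linkage agglomerative clustering, stopped at a distance threshold."""
    counts = co_occurrence(sorts)
    n = len(sorts)
    cards = sorted({card for groups in sorts for group in groups for card in group})
    clusters = [[card] for card in cards]

    def avg_dist(x, y):
        total = sum(distance(a, b, counts, n) for a in x for b in y)
        return total / (len(x) * len(y))

    while len(clusters) > 1:
        # Find the two closest clusters.
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda p: avg_dist(clusters[p[0]], clusters[p[1]]),
        )
        if avg_dist(clusters[i], clusters[j]) > threshold:
            break  # remaining clusters are too far apart to merge
        merged = sorted(clusters[i] + clusters[j])
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)]
        clusters.append(merged)
    return sorted(clusters)


print(cluster(sorts, threshold=0.5))
```

In this toy data, all three participants placed "My Courses" and "Assigned Learning" together, so those two cards merge first; the other cards stay separate at a threshold of 0.5. A real analysis would typically inspect the full merge tree (dendrogram) rather than a single cut-off.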

The groups identified through both the qualitative and the quantitative analyses were then compared with the current information architecture of the LXP, to identify relevant insights and opportunities for improvement.

User Types & Behaviors

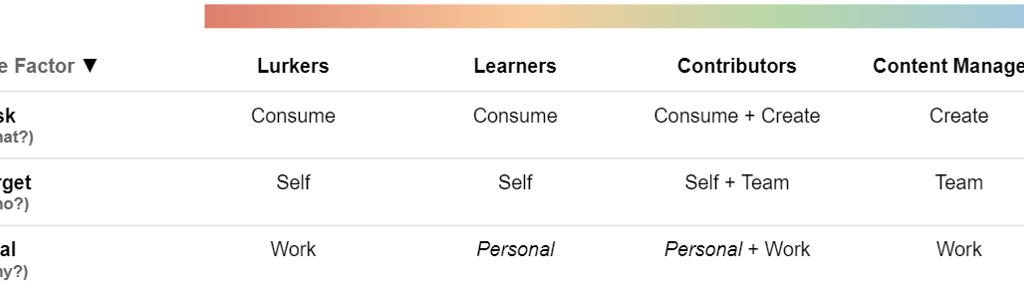

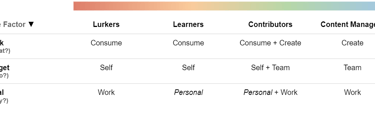

Based on the data acquired from the interview stages of the session and, once again, using thematic analysis and affinity mapping, I was able to identify 3 main dimensions that can be used to classify the participants of this study and thus, potentially, platform users in general:

• Task (What the user does on the LXP): Consume vs Create content.

• Target (Who benefits from the user's task): The User vs the Team/Organization.

• Goal (Why use the LXP): Work- vs Personal-reasons.

Based on the above, it was possible to further identify 4 main groups of users:

• Lurkers - They use the LXP to consume content, for themselves, but in the context of their work - e.g., complete assignments, stay informed about what happens in the company;

• Learners - They use the LXP to consume content, for themselves, to learn something new or improve on existing knowledge - e.g., develop a new skill;

• Contributors ("the ideal user") - They use the LXP to both consume and share content. They are interested in self-improvement and share their own knowledge with their team;

• Content Managers - They use the LXP to share content with their team, purely in the context of their work - e.g., create a guide on how to use one of the company's tools.
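One way to read the relationship between the three dimensions and the four groups is as a lookup table. The sketch below is an assumption-laden illustration, not part of the original analysis: the labels (`"consume"`, `"both"`, `"self"`, and so on), the `USER_GROUPS` table, and the `classify` function are hypothetical, and the Target and Goal values assigned to Contributors are my interpretation of the description above.

```python
# Hypothetical encoding of the three dimensions:
#   task:   "consume", "create", or "both"
#   target: "self" or "team"
#   goal:   "work" or "personal"
USER_GROUPS = {
    ("consume", "self", "work"): "Lurker",
    ("consume", "self", "personal"): "Learner",
    # Assumed reading: Contributors learn for themselves and share with their team.
    ("both", "team", "personal"): "Contributor",
    ("create", "team", "work"): "Content Manager",
}


def classify(task, target, goal):
    """Return the user group for a combination of dimensions, if one was described."""
    return USER_GROUPS.get((task, target, goal), "Unclassified")


print(classify("consume", "self", "personal"))  # Learner
print(classify("create", "self", "personal"))   # Unclassified
```

Combinations that do not match any of the four groups are returned as "Unclassified", since the study describes only the groups it observed.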

Reporting the Results

Combining the results described above with the subjective feedback provided by the participants, I extracted and summarized the key insights to present internally. Once again, due to the sensitivity of this information, I am not allowed to go into detail. However, this exercise revealed:

• The importance of planned learning and activities, which is not fully represented by the platform;

• That users interpret important concepts in the platform in a significantly different way from how they were originally defined;

• A lack of clarity around certain actions, consequences, and general elements in the platform;

• Bonus: UX pain-points - while describing their groups, some participants mentioned their challenges with specific features.

These were reported in 2 main formats:

• Stakeholder presentations: meetings supported by presentation slides summarizing the study, results, and relevant resources;

• Dedicated project page (on Confluence), visible to the entire organization. This includes all resources used in this study, such as planning documents, study guides, documents used for the analysis, and a summary of the results.

Outcomes and Takeaways

With this research initiative, we were able to better understand who is currently using the LXP, how they think about it, and how well the LXP reflects that.

The results of this research fostered significant internal discussions, especially within our Product Team, resulting in the start of strategic UX fixes across the platform. Furthermore, they also became extremely important in multiple design initiatives. At the time of writing this page, the LXP is undergoing a significant redesign - not too different from the projects described on the EdCast Marketplace page.

From a personal perspective, this project was particularly exciting, as it was the first opportunity I had to interact directly with customers of a product line that I had only just started to be involved with. It also revealed to the Product Team the opportunity for additional research partnerships with customers, in which, at the time of this writing, I am heavily involved.